Value Iteration, Policy Iteration & Truncated Iteration

The idea of interaction between value and policy update (generalized policy iteration) widely exists in Reinforcement Learning. This is also Dynamic Programming. These algorithm is also Model Required.

Value Iteration

Solving the Bellman Optimality Equation through using contraction mapping theorem

$$

v_{k+1} = \max_{\pi \in \Pi}(r_\pi + \gamma P_\pi v_k), \quad k = 0, 1, 2, \dots

$$

This will guarantee the convergence of optimal state value and policy, according to the contract mapping theorem.

Step 1: Policy Update

$$

\pi_{k+1} = arg \max_\pi (r_\pi + \gamma P_\pi v_\pi)

$$

Step2: Value Update

$$

v_{k+1}=r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}}v_k

$$

Elementwise Form Analysis

First, find the best policy:

$$

\pi_{k+1}(s)= arg\max_\pi\sum_{a\in \mathcal{A}}\pi(a|s)[\sum_{r\in \mathcal{R}}p(r|s, a)r+ \gamma \sum_{s’\in \mathcal{S}}v_\pi(s’)p(s’|s, a)], \quad s\in \mathcal{S}

$$

Second, update the state value:

$$

v_{k+1} = \sum_{a\in \mathcal{A}}\pi(a|s)[\sum_{r\in \mathcal{R}}p(r|s, a)r+ \gamma\sum_{s’\in \mathcal{S}}v_\pi(s’)p(s’|s, a)], \quad s\in \mathcal{S}

$$

$a^* = arg \max_a q_k(s, a)$, We have

$$ \pi(a|s) = \begin{cases} 1, \quad a = a^*\\0, \quad a \neq a^*\end{cases} $$Thus, the optimal state value after updating is

$$

v_{k+1}(s) = \max_a q_k(s, a)

$$

where the $v_k, v_{k+1}$ are only the intermediate value generated in the process of algorithm.

Algorithm

Initialization: The probability models $p(r\mid s,a)$ and $p(s’\mid s,a)$ for all $(s,a)$ are known.

Goal: Search the optimal state values and an optimal policy that satisfy the Bellman optimality equation.

Loop: While $\lVert v_k - v_{k+1} \rVert_\infty \ge \varepsilon$ (iteration $k$):

For every state $s \in \mathcal{S}$:

For every action $a \in \mathcal{A}$:

- Step 1: $$ q_k(s,a) = \sum_{r\in\mathcal{R}} p(r\mid s,a)\, r + \gamma \sum_{s'\in\mathcal{S}} p(s'\mid s,a)\, v_k(s') $$

Step 2: Choose greedy action

$$

a_k^*(s) = \arg\max_a q_k(s,a)

$$Step 3: Policy update

$$ \pi_{k+1}(a\mid s) = \begin{cases} 1, & a = a_k^*(s),\\ 0, & a \ne a_k^*(s) \end{cases} $$Step 4: Value update

$$

v_{k+1}(s) = \max_a q_k(s,a)

$$

Policy Iteration

Indirectly solving the Bellman Equation. It’s the foundation of Monte Carlo (MC). It has two parts, policy evaluation and policy improvement.

Step1: Policy Evaluation.

This step will calculate the state value and evaluate the policy.

$$

v_{\pi_k} = r_{\pi_k} + \gamma P_{\pi_k}v_{\pi_k}

$$

where the $r_{\pi_k}, P_{\pi_k}$ can be obtained through system models, $\pi_k$ is the policy obtained in the last iteration and $v_{\pi_k}$ is the state value to be calculated in this iteration.

Step2: Policy Improvement.

once $v_

{\pi_k}$ is calculated at this first step, we can update the policy $\pi_{k+1}$ through.

$$

\pi_{k+1} = arg\max_\pi(r_\pi + \gamma P_\pi v_{\pi_k})

$$

How to obtain $v_{\pi_k}$ 🤔?

To calculate $v_{\pi_k}$, we need to do an embedded iteration based on contract mapping theorem. We can also use $v_{\pi_k}=(I-\gamma P_{\pi_k})^{-1}r_{\pi_k}$ and find the inverse of matrix. But it is not recommended.

$$

v_{\pi_k}^{(j+1)} = r_{\pi_k} + \gamma P_{\pi_k}v_{\pi_k}^{(j)}, \quad j = 0, 1, 2, \cdots

$$

Mathematically, there will be infinite steps to obtain the convergence. However, we can artificially set a threshold for $||v_{\pi_k}^{(j+1)} - v_{\pi_k}^{(j)}||$ or the maximum steps for $j$.

Why is $\pi_{k+1}$ better than $\pi_k$?

$$

\because \pi_{k+1} = arg\max_\pi(r_\pi + \gamma P_\pi v_{\pi_k})

$$

$$

\therefore v_{\pi_{k+1}}(s) \geq v_{\pi_k}(s) , \quad \text{for all}\quad s

$$

The prove is that since $v_{\pi_{k+1}}$ and $v_{\pi}$ are states values, they satisfy the Bellman Equation.

$$

v_{\pi_{k+1}} = r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k+1}}

$$

$$

v_{\pi_{k}} = r_{\pi_{k}} + \gamma P_{\pi_{k}} v_{\pi_{k}}

$$

Because equation (15), we know that $r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k}} > r_{\pi_{k}} + \gamma P_{\pi_{k}} v_{\pi_{k}}$. Therefore

$$

v_{\pi_{k}} - v_{\pi_{k+1}}= (r_{\pi_{k}} + \gamma P_{\pi_{k}} v_{\pi_{k}}) - (r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k+1}}) \leq (r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k}}) - (r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k+1}}) = \gamma P_{\pi_{k+1}}(v_{\pi_k} - v_{\pi_{k+1}})

$$

Thus, $v_{\pi_{k}} - v_{\pi_{k+1}} \leq \lim_{n\rightarrow \infty} \gamma^n P_{\pi_{k+1}}^n(v_{\pi_{k}} - v_{\pi_{k+1}})=0$

Since the policy iteration contains an embedded iterations, its convergence will be faster than value iteration.

Algorithm

Initialization: The system model, $p(r|s, a)$ and $p(s’|s,a)$ for all $s, a$ is known. Initial guess $\pi_0$

Goal: Search for optimal state value and an opitmal policy.

Loop: While $v_{\pi_k}$ has not converged, for the $k$ th iteration, do

Policy Evaluation:

Initialization: an arbitrary initial guess $v_{\pi_k}^{(0)}$

While $v_{\pi_k}^{(j)}$ has not converged, for the $j$ th iteration, do

- For every state:

- $v_{\pi_k}^{(j+1)}(s) = \sum_a \pi_k(a|s) [\sum_r p(r|s, a)r + \gamma\sum_{s’}p(s’|s, a)v_{\pi_k}^{(j)}(s’)]$

Policy Improvement:

For every state $s \in \mathcal{S}$, do:

- For every action $a \in \mathcal A$, do:

- Calculate the action value:

- $q_{\pi_k}(s, a) = \sum_r p(r|s, a)r + \gamma\sum_{s’}p(s’|s, a)v_{\pi_k}(s’)$

- $a_k^*(s) = arg\max_a q_{\pi_k} (s, a)$

- $\pi_{k+1}(a|s) = 1$ if $a = a^*_k$ and $\pi_{k+1}(a|s)=0$ otherwise.

- For every action $a \in \mathcal A$, do:

In the numerical experiment,

- we can find that the states that are close to the target area can find the optimal policy faster than those far away.

- we can also find that the states that are closed to the target area have larger state values.

Truncated Policy Iteration

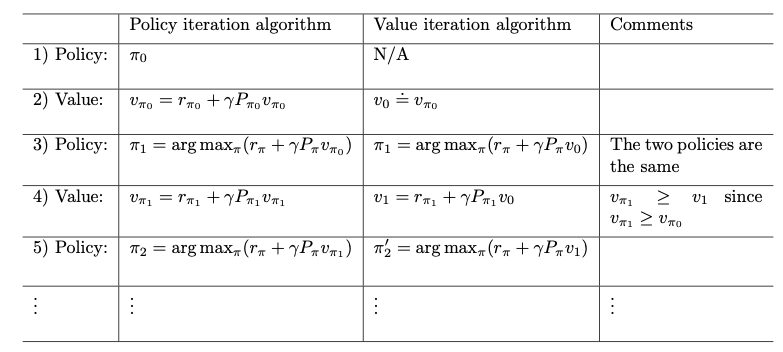

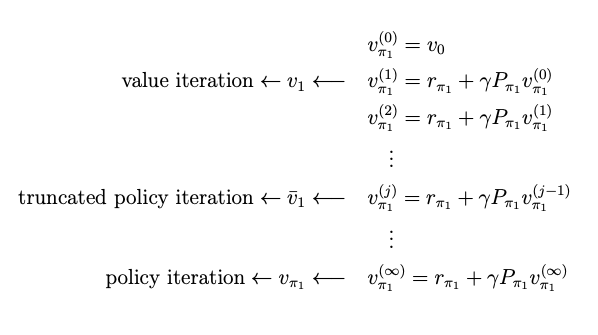

It is the unified version of Value Iteration and Policy Iteration.

- If the embedded iteration is only one-step instead of infinite steps then the policy iteration will be degraded to value iteration.

- If the embedded iteration is finite steps instead of infinite steps then the policy iteration will be degraded to truncated policy iteration.